WFA and Platforms Make Major Progress to Address Harmful Content

- Press Release

- September 23, 2020

- WFA

Global Alliance for Responsible Media (GARM) agrees commitments and timeframe for the first global brand safety and sustainability framework for the advertising industry.

Facebook, YouTube and Twitter, in collaboration with marketers and agencies through the Global Alliance for Responsible Media, have agreed to adopt a common set of definitions for hate speech and other harmful content and to collaborate on monitoring industry efforts to improve in this critical area.

The changes follow 15 months of intensive talks within GARM between major advertisers, agencies and key global platforms, with the first changes to be introduced this month. GARM is a cross-industry initiative founded and led by the World Federation of Advertisers (WFA) and supported by other trade bodies, including ANA, ISBA and the 4A’s.

Four key areas for action have been identified, designed to boost consumer and advertiser safety, with individual timelines agreed for each platform to implement changes across the different areas.

The key areas of agreement are (details below):

- Adoption of GARM common definitions for harmful content;

- Development of GARM reporting standards on harmful content;

- Commitment to have independent oversight on brand safety operations, integrations and reporting;

- Commitment to develop and deploy tools to better manage advertising adjacency.

“The issue of harmful content online has become one of the challenges of our generation. As funders of the online ecosystem, advertisers have a critical role to play in driving positive change and we are pleased to have reached agreement with the platforms on an action plan and timeline in order to make the necessary improvements. A safer social media environment will provide huge benefits not just for advertisers and society but also to the platforms themselves.”

Stephan Loerke, WFA CEO

WFA believes that the standards should be applicable to all media given the increased polarisation of content regardless of channel, not just the digital platforms. As such, it encourages members to apply the same adjacency criteria for all their media spend decisions irrespective of the media.

Common definitions will create a common baseline on harmful content.

Today, advertising definitions of harmful content vary by platform, which makes it hard for brand owners to make informed decisions on where their ads are placed and to promote transparency and accountability industry-wide.

GARM has been working on common definitions for harmful content since November and these have been developed to add more depth and breadth pertaining to specific types of harm such as hate speech and acts of aggression and bullying.

All platforms will now adopt the common definitions as part of their advertising content standards and enforce them consistently.

Harmonized reporting will drive better behaviours.

Today, each platform has its own methodologies to measure the occurrence of harmful content. There is a need to harmonize those methodologies and to focus on metrics that are truly meaningful from a brand and a societal perspective, namely how we measure and quantify the presence of harmful content per platform.

Having a harmonized reporting framework is a critical step to ensure that policies around harmful content are enforced effectively. All parties have now agreed to pursue a set of harmonized metrics on issues around platform safety, advertiser safety and platform effectiveness in addressing harmful content.

Between September and November, work will continue to develop a set of harmonized metrics and reporting formats, for approval and adoption in 2021.

Independent audits will drive better implementation and build trust.

With the stakes so high, brands, agencies, and platforms need an independent view on how individual participants are categorising, eliminating, and reporting harmful content. A third-party verification mechanism is critical to driving trust among all stakeholders.

The goal is to have all major platforms audited for brand safety or have a plan in place for audits by year end.

Advertising adjacency solutions are necessary and will be developed.

Advertisers need visibility and control so that their advertising does not appear adjacent to harmful or unsuitable content, and they need to be able to take corrective action quickly if necessary.

GARM is working to define adjacency with each platform, and then to develop standards that allow for a safe experience for consumers and brands. Platforms that have not yet implemented an adjacency solution will have a roadmap by year-end. Platforms will provide a solution through their own systems, via third-party providers or a combination thereof.

“We are delighted that GARM has made such significant progress in such a short period of time. I know these discussions have not been easy but these solutions when implemented, will offer more choice and control for advertisers and their agencies by supporting content that aligns with their values.”

Raja Rajamannar, CMCO at Mastercard and WFA President

“This is a significant milestone in the journey to rebuild trust online. Unilever has long championed a responsible and safe online environment through Unilever’s Responsibility Framework and as founding members of GARM, we are encouraged by the acceleration and focus to come together as an industry and agree on these four key areas of action. The issues within the online ecosystem are complicated, and whilst change doesn’t happen overnight, today marks an important step in the right direction.”

Luis Di Como, Executive Vice President, Global Media, Unilever

“This is a meaningful milestone in our work with GARM and part of a longer journey that started over 18 months ago. Thanks to the uncommon collaboration of GARM’s diverse membership, we now have a time-bound roadmap for the development of foundational standards, definitions and reporting practices across social media platforms which will help make social media an experience that is safer for everyone, consumers and brands alike. This is not a declaration of victory as there is much work to be done and we rely on all of our platform partners to follow through on their commitments with the pace and urgency these issues demand. Nevertheless, this is an important step in making social media a safer place for society and it’s important to recognise the progress and build further momentum as a result.”

Jacqui Stephenson, Global Responsible Marketing Officer, Mars

"This uncommon collaboration, brought together by the Global Alliance for Responsible Media, has aligned the industry on the brand safety floor and suitability framework, giving us all a unified language to move forward on the fight against hate online."

Carolyn Everson, VP Global Marketing Solutions, Facebook

"We continue to be committed to aligning on industry standards and frameworks that will help address harmful content and create a brand-safe environment for advertisers. We are proud of the progress we have made in partnership with GARM and the other members to implement changes we believe will help create a place for healthy public conversation."

Sarah Personette, VP, Global Client Solutions, Twitter

“Responsibility is our #1 priority, and we are committed to working with the industry to build a more sustainable and healthy digital ecosystem for everyone. We have been actively engaged with GARM since its inception to help develop industry-wide standards for how to commonly address content that is not suitable for advertising. We're excited to have reached this important milestone.”

Debbie Weinstein, Vice President, Global Solutions, YouTube

"The ANA applauds the substantive and meaningful progress achieved through a unique and distinctive collaboration among platforms, marketers, agencies and trade bodies. Today, we take an important, if not momentous step to making brands safe and consumers safer – free from all hateful and harmful speech. While much more work lies ahead, this announcement represents a significant leap forward in our journey towards a brand safe ecosystem."

Bob Liodice, President and CEO, ANA

"The safety of social media platforms for users has become the primary concern of all responsible advertisers. ISBA strongly backs the goals of the Global Alliance, having long argued that advertisers have the right to demand clarity in community standards and reassurance as to the effectiveness of their enforcement. This announcement is a significant step towards these goals, raising the bar for the industry through the agreement to consistency in standards and independently verified reporting."

Phil Smith, Director General, ISBA

"The significant progress of GARM reflects the consumer imperative to ensure a safe media environment. By focusing on consistency and accountability, the team simplified the assessment of platforms ensuring advertisers and agencies can better assess the impact of harmful content. This work reflects the impact that industry leaders have when aligning on a common approach to ensure a better environment and experience for the most important constituent: the consumer."

Marla Kaplowitz, President & CEO, 4A's

"The efforts made in the past year to align the industry on 11 harmful content definitions are unprecedented. We now must push forward to operationalize the model to drive predictability and consistency in identifying harmful content and build the tools to avoid spreading harm and having it associated with brands."

Joe Barone, Managing Partner, Brand Safety Americas, GroupM

As originally written on the WFA website.

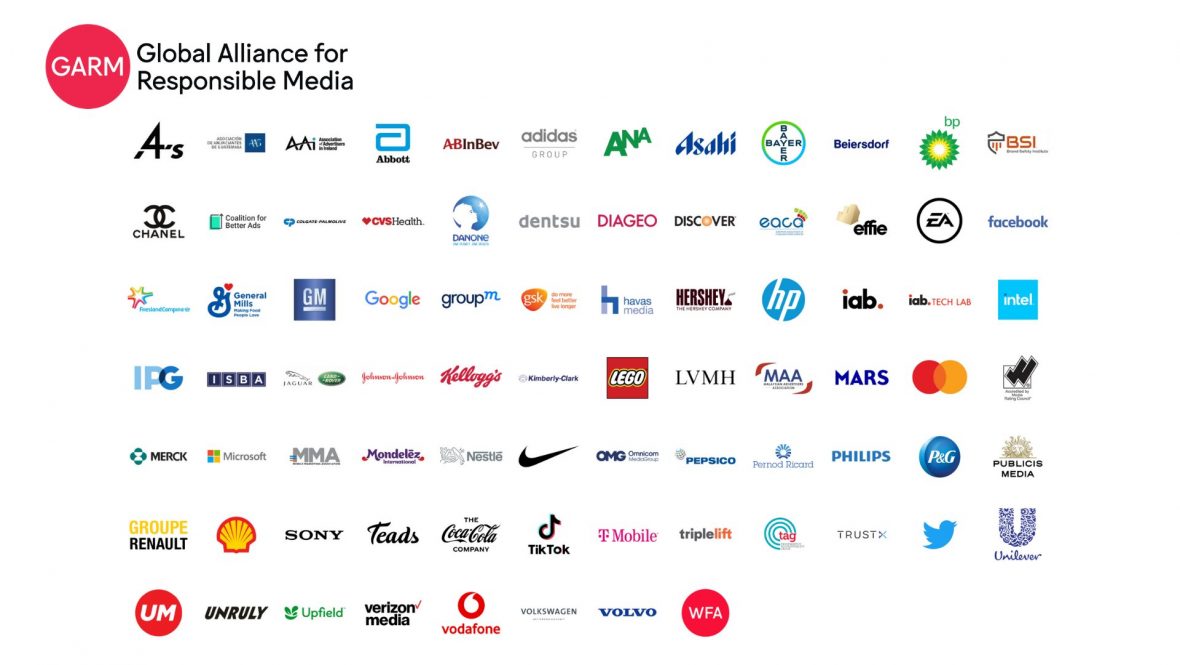

The Global Alliance for Responsible Media (GARM) is an initiative led by WFA that brings together advertisers, agencies, media companies, platforms and industry organisations to improve digital safety. Members of the Global Alliance for Responsible Media recognise the role that advertisers can play in collectively pushing to improve the safety of online environments. Together, they are collaborating with publishers and platforms to do more to address harmful and misleading media environments, and to develop and deliver against a concrete set of actions, processes and protocols for protecting brands.